TL;DR

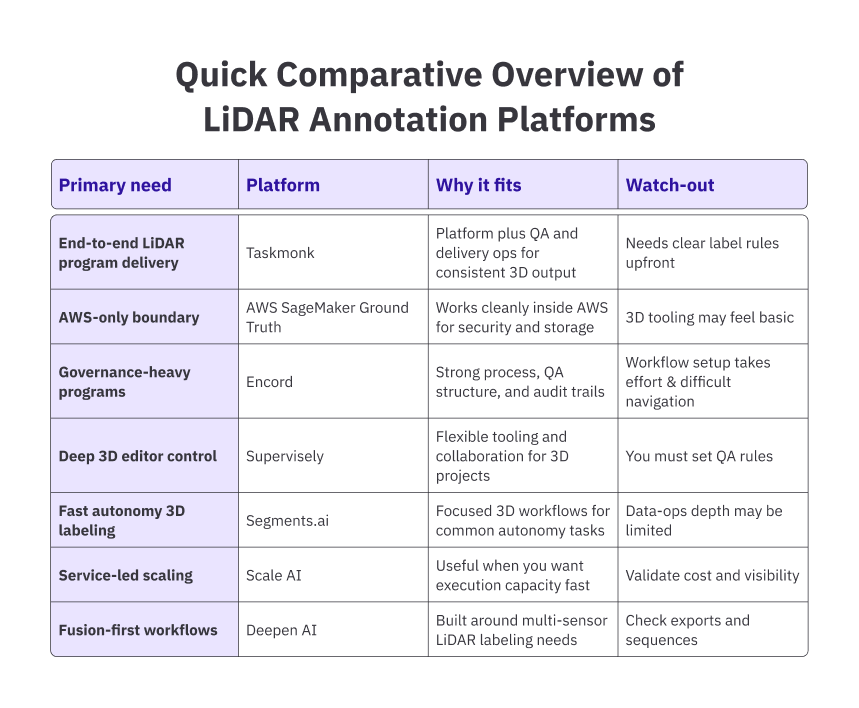

LiDAR labeling gets expensive when the tool forces manual work: weak sequence workflows, clunky review, or noisy exports. This guide compares seven LiDAR annotation platforms in 2026 on 3D tooling, QA + review loops, automation, integrations, and scale.

Best LiDAR Annotation Platforms in 2026:

- Taskmonk

- Scale AI

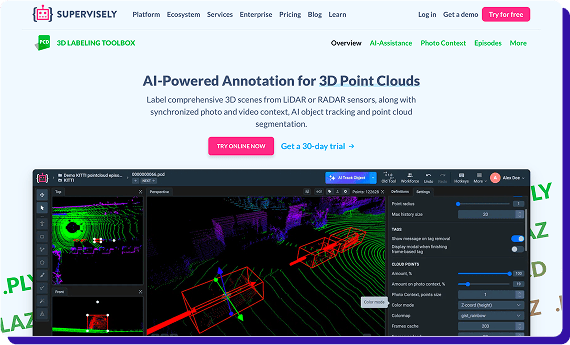

- Supervisely

- AWS SageMaker

- Encord

- Deepen AI

- Segments AI

Introduction

LiDAR annotation is one of those tasks that looks straightforward until you are doing it at scale. The moment you add multi-sensor scenes, long-range sparsity, tight class definitions, and review cycles, small inconsistencies turn into expensive rework and noisy training data.

In 2026, the best LiDAR annotation platform is not just a fancy 3D labeling UI. It is a robust system that helps you label faster without compromising accuracy, supports your real workflows, and makes quality measurable through review, audits, and clear exports. That is where platforms like Taskmonk win.

In this guide, we evaluate the best LiDAR annotation platforms in 2026, comparing them on 3D tooling depth, automation, quality controls, workflow fit, and pricing signals, so you can shortlist based on your use case, not marketing.

We will cover Taskmonk, Scale AI, Supervisely, AWS SageMaker, Encord, Deepen AI, and Segments AI, and we will keep the focus on fit for real LiDAR annotation tasks.

How we curated this list

- Prioritized 3D point cloud coverage (cuboids, segmentation, vectors) and sequence tooling (tracking, ID consistency).

- Checked sensor fusion claims (LiDAR + cameras; calibration context) and basic performance at scene scale.

- Assessed QA patterns (review modes, consensus/maker‑checker, auditability) and export options (SDK/API readiness).

- Looked for cloud/security fit (AWS‑native, VPC options) and workflow integration.

- Considered pricing/TCO signals where public (task/unit economics, workforce options).

- Reviewed vendor docs and product pages; spot‑checked public reviews where available (G2/Docs/Blogs).

What is LiDAR Annotation?

LiDAR annotation is the process of labeling objects and structures in 3D point cloud data so that machine learning models can learn 3D perception. In practice, it includes tasks like 3D bounding boxes for vehicles, pedestrians, and cyclists, point-level semantic segmentation for roads and infrastructure, and lane or drivable-area labeling when needed.

Read more on LiDAR annotation in this LiDAR annotation 101 guide.

While ADAS and autonomous driving are the most common use cases, LiDAR annotation is also used in robotics and warehouse automation, mapping and smart cities, construction and surveying, rail and utilities inspection, and geospatial workflows for terrain and change detection.

Across all of these, the goal is the same: a consistent 3D ground truth that stays reliable across environments, sensors, and edge cases.

Best LiDAR Annotation Platforms in 2026

LiDAR projects fail for the most trivial reasons: inconsistent box rules across labelers, sequence drift, weak QA, and exports that do not match your training stack.

The platforms below are shortlisted based on how well they help teams avoid those issues in production.

- Taskmonk

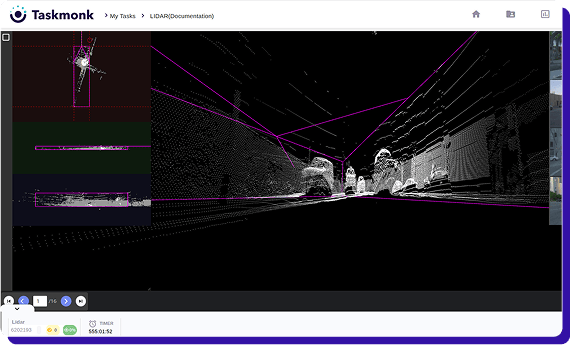

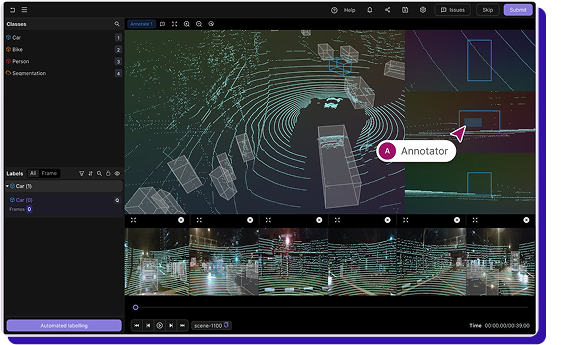

Taskmonk is built for LiDAR annotation programs, where the hard part is not drawing 3D boxes; it’s consistent output across weeks of production.

Taskmonk treats LiDAR annotation as a single production data pipeline, keeping labeling, review, and delivery in one loop, so teams do not rely on stitched tools for operations and QA.

It supports common 3D label types across single frames and sequences, utilizes practical automation through pre-labeling and review, and applies structured QA patterns with enterprise controls, including SSO, RBAC, and audit trails.

Where Taskmonk stands out for LiDAR annotation

- Multi-sensor fusion done properly: Combines LiDAR with camera and radar, with calibration, time sync, and multi-view playback for reliable geometry checks across scenes.

- Sequence consistency features: Persist object IDs across frames and support keyframes plus interpolation and propagation, so long sequences do not become repetitive manual work.

- 3D tooling beyond cuboids: Built for real point cloud work, like large scene handling, clipping, and visualization controls that make sparse and dense clouds workable.

- Full label types for road and mapping workflows: Supports cuboids, polylines for lanes and curbs, polygons for drivable areas, keypoints, and semantic or instance segmentation with attributes.

- AI assistance with review discipline: Uses pre-labels that labelers approve or correct, routes low-confidence items, and standardizes decisions using gold tasks, rubrics, and feedback loops.

- QA as a program, not a checkbox: Maker checker, consensus, rule checks, per-class KPIs, and audit trails so you catch drift early, trace it to a root cause, and correct it across the pipeline.

- Pipeline-ready ingest and exports: Supports importing single scans or long sequences from common cloud buckets, keeps timestamps and calibration context, and exports clean dataset versions via APIs.

- Enterprise controls plus delivery model: Offers SSO and RBAC with VPC or on-prem options, and a POC-first approach with measurable KPIs.
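The "keyframes plus interpolation" idea in the sequence consistency bullet above can be illustrated with a minimal Python sketch. It is not Taskmonk's implementation or API: the `Cuboid` fields, the function names, and the assumption that an object moves linearly between two labeled keyframes are all hypothetical, chosen only to show why a labeler needs to correct far fewer frames than the sequence contains.

```python
import math
from dataclasses import dataclass


@dataclass
class Cuboid:
    """Hypothetical 3D box: center, size, and heading (yaw, in radians)."""
    x: float
    y: float
    z: float
    length: float
    width: float
    height: float
    yaw: float


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def lerp_angle(a: float, b: float, t: float) -> float:
    """Interpolate headings along the shortest arc, so 170 deg -> -170 deg
    passes through 180 deg instead of swinging back through 0.
    The result is not re-wrapped and may fall slightly outside [-pi, pi)."""
    diff = (b - a + math.pi) % (2 * math.pi) - math.pi
    return a + diff * t


def interpolate_cuboid(k0: Cuboid, k1: Cuboid,
                       frame0: int, frame1: int, frame: int) -> Cuboid:
    """Estimate the box at `frame` from keyframes at frame0 and frame1.
    Assumes roughly linear motion between keyframes (an assumption)."""
    if frame0 >= frame1 or not frame0 <= frame <= frame1:
        raise ValueError("need frame0 <= frame <= frame1 and frame0 < frame1")
    t = (frame - frame0) / (frame1 - frame0)
    return Cuboid(
        x=lerp(k0.x, k1.x, t),
        y=lerp(k0.y, k1.y, t),
        z=lerp(k0.z, k1.z, t),
        length=lerp(k0.length, k1.length, t),
        width=lerp(k0.width, k1.width, t),
        height=lerp(k0.height, k1.height, t),
        yaw=lerp_angle(k0.yaw, k1.yaw, t),
    )


if __name__ == "__main__":
    start = Cuboid(0.0, 0.0, 0.0, 4.0, 2.0, 1.5, math.radians(170))
    end = Cuboid(10.0, 0.0, 0.0, 4.0, 2.0, 1.5, math.radians(-170))
    # Fill frames 1..9 from the two keyframes at frames 0 and 10.
    for f in range(1, 10):
        print(f, interpolate_cuboid(start, end, 0, 10, f))
```

In a real tool the interpolated boxes would still go through review; the sketch only shows where the saved manual work comes from.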

Why shortlist Taskmonk

- Program‑grade QA + delivery ops to keep weekly output consistent and measurable.

- Sensor‑fusion context and API‑ready exports for pipeline fit.

Best for: Organizations that want one owner for LiDAR labeling + QA + delivery with clear SLAs and production KPIs.

Not ideal for: DIY teams wanting only a standalone editor without ops involvement.

- Scale AI

Scale’s LiDAR workflow is built around API-driven sensor fusion tasks, where a scene can include point clouds plus 2D imagery, and supports outputs like 3D cuboids, point cloud segmentation, 3D keypoints, and top-down polygons. It also supports a practical dependency flow where segmentation tasks can be created from completed LiDAR annotation tasks, which helps reduce repetitive setup and rework.

Pricing is task-based and depends on task setup and labeler responses. Validate unit economics and quality controls early, since practitioners often raise questions about how Rapid workflows ensure quality and consistency at speed.

Where Scale AI stands out for LiDAR annotation

- Sensor fusion task setup for LiDAR plus 2D context, useful when boxes need camera confirmation.

- Broad autonomy label coverage, including 3D cuboids, segmentation, 3D keypoints, and top-down polygons.

- Workflow chaining where segmentation can start from completed cuboid runs, reducing repetitive work.

Best when you need execution capacity fast; validate unit economics and QA visibility. (API reference)

Evidence (public): API/docs describe sensor‑fusion workflows and task chaining; LiDAR‑specific third‑party quality reviews are sparse.

- Supervisely

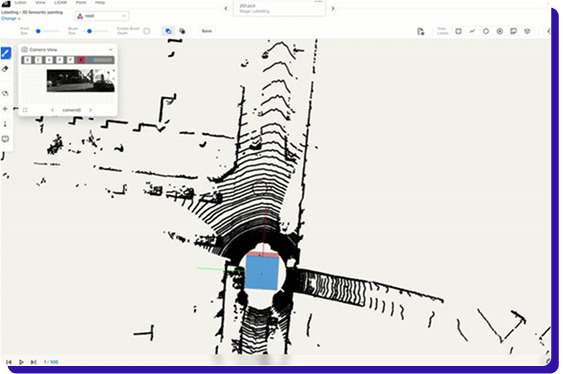

Supervisely is an editor-first platform with explicit support for 3D point clouds and tooling like cuboid and point cloud pen, aimed at detection and segmentation tasks, with documentation that also covers performance-minded handling of large point clouds.

In practice, users like the breadth of tooling, but a recurring downside is that the platform can feel overwhelming and harder to learn, especially for new users, so onboarding time is a real consideration.

If you shortlist it, the main risk is not capability but operational smoothness: make sure your team can enforce QA and keep reviewers productive without getting bogged down in complexity.

Where Supervisely stands out for LiDAR annotation

- Editor-first 3D tooling with strong control for point cloud labeling and segmentation-style workflows.

- Sensor fusion support for LiDAR with multiple cameras is helpful for tough boundary calls.

- Flexible project setup for teams running in-house labeling programs with custom processes.

Not ideal for: Teams seeking a light learning curve or baked‑in QA governance.

Evidence (public): Docs and user comments highlight robust LiDAR+fusion tooling and large scene handling; claims about steep onboarding/complexity are practitioner anecdotes.

- AWS SageMaker Ground Truth

Amazon SageMaker Ground Truth is an AWS-native labeling service that supports 3D point cloud annotation with built-in UIs and managed workforce options. It fits best when your data, security, and training pipelines already run on AWS.

It is a practical choice for teams that want an AWS-first setup for LiDAR labeling, especially when IAM controls, logging, encryption, and S3-based workflows are non-negotiable.

Where SageMaker Ground Truth stands out for LiDAR annotation

- AWS-native governance: IAM-based access, S3-first storage, and standard AWS logging and encryption patterns.

- 3D labeling plus sensor fusion: You can provide point cloud data and optionally sensor fusion data, and project labels between 3D and 2D imagery for higher-confidence annotation.

- Supports single frames and sequences: Object detection and semantic segmentation can be done per frame, and object tracking can be done across sequences.

- Workforce options: Use a private workforce or a vendor workforce from AWS Marketplace for 3D point cloud labeling jobs.
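The idea of projecting labels between 3D and 2D imagery, mentioned in the sensor fusion bullet above, can be sketched generically. This is not the Ground Truth API or its input format: the function name, the 4x4 LiDAR-to-camera matrix layout, and the pinhole intrinsics (`fx`, `fy`, `cx`, `cy`) are assumptions for illustration, and lens distortion is ignored.

```python
from typing import Optional, Sequence, Tuple


def project_point(
    point_lidar: Tuple[float, float, float],
    lidar_to_cam: Sequence[Sequence[float]],
    fx: float, fy: float, cx: float, cy: float,
) -> Optional[Tuple[float, float]]:
    """Project one LiDAR point into pixel coordinates.

    lidar_to_cam is an assumed 4x4 rigid transform [R|t] that maps LiDAR
    coordinates into a camera frame with z pointing forward. Returns None
    when the point lies behind the camera.
    """
    x, y, z = point_lidar
    # Apply rotation and translation using the first three matrix rows.
    xc, yc, zc = (
        r[0] * x + r[1] * y + r[2] * z + r[3] for r in lidar_to_cam[:3]
    )
    if zc <= 0:
        return None
    # Pinhole projection: scale by depth, then shift to the principal point.
    return (fx * xc / zc + cx, fy * yc / zc + cy)


if __name__ == "__main__":
    # Assumed axes: LiDAR x forward, y left, z up;
    # camera x right, y down, z forward; no translation.
    extrinsic = [
        [0, -1, 0, 0],
        [0, 0, -1, 0],
        [1, 0, 0, 0],
        [0, 0, 0, 1],
    ]
    # A point 10 m ahead and 1 m to the right of the sensor.
    print(project_point((10.0, -1.0, 0.0), extrinsic,
                        1000.0, 1000.0, 640.0, 360.0))
```

Projecting the eight corners of a cuboid this way is what lets a reviewer check a 3D box against the camera image; if calibration or time sync is off, the projected box visibly drifts from the object.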

Not ideal for: Users expecting the deepest 3D editor controls or vendor‑agnostic pipelines.

Evidence (public): AWS docs confirm 3D point cloud + optional sensor fusion, tracking & segmentation jobs, and workforce options. Editor depth vs. specialists is a common inference that still needs verification through a hands‑on trial.

- Encord

Encord is a recognized option for LiDAR annotation programs that also care about review structure, traceability, and governance.

It can be a good fit if you want 3D labeling inside a broader workflow and governance system. The tradeoff is that you’ll likely spend time configuring workflow states, reviews, and rules.

So it’s worth piloting to understand how reviewers handle long sequences, whether exports map cleanly into your training stack, and whether large scenes stay responsive at your real labeling volume.

Where it stands out for LiDAR annotation

- 3D annotation coverage for common LiDAR labeling tasks across frames and sequences

- Sequence consistency features to keep objects stable over time

- Practical assistance patterns like seeding labels and routing harder cases for second reads

- QA structure to standardize decisions across labelers and reviewers

- Enterprise controls for access management and auditability across larger teams

Why shortlist Encord

- Mature process + audit trails for regulated or high‑governance environments.

- Works across 3D + broader data pipelines, reducing tool sprawl.

Not ideal for: Orgs that need a quick start with minimal workflow setup.

Evidence (public): Encord docs/blogs highlight ontology, review workflows, and auditability. Forum chatter notes setup effort; long‑sequence ergonomics/performance requires pilot. -

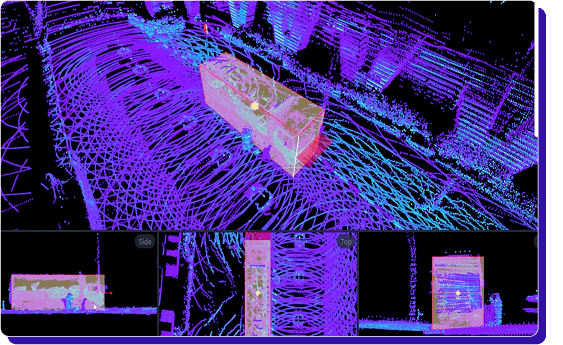

Deepen AI

Deepen AI is positioned specifically for multi-sensor LiDAR annotation and calibration, and its sensor-fusion LiDAR tooling lists support for 3D bounding boxes, semantic segmentation, polylines, and instance segmentation, with claims around handling very large point clouds.

A concrete limitation called out in an industry comparison is that point cloud workflows can be lighter on automated labeling than their 2D capabilities, and multi-sensor support is described as more limited than some specialist stacks, so you should validate automation depth and fusion workflow fit in your pilot.

It is best treated as a fusion-focused option where you confirm sequence reviewer ergonomics, exports, and how much manual work remains for point-cloud labeling at your real volume.

Where it stands out for LiDAR annotation

- Strong coverage of common LiDAR tasks like 3D boxes, polylines, and segmentation variants.

- Emphasis on calibration and multi-sensor setup, which matters when sync issues create label noise.

- Segments AI

Segments.ai is a multi-sensor labeling platform built for robotics and autonomous driving, with 3D point cloud tooling that includes cuboids, segmentation, and vector labels (polylines, polygons, keypoints), plus merged point cloud and batch mode features aimed at faster sequence work.

It can work well when you want to label 3D and 2D together and export through an SDK or API, but you should pilot the learning curve for multi-sensor workflows and check whether documentation and semi-automatic assistance meet your team’s expectations, since users call out onboarding friction and gaps.

Where it stands out for LiDAR annotation

- Batch-oriented features are designed to speed up repetitive labeling and review across scenes.

- API and SDK-style exports that suit teams building repeatable training data pipelines.

- Multi-sensor labeling with 3D and 2D views together, reducing ambiguity during review.

Best for: ML engineers in autonomy/robotics who want speed with pragmatic tooling across 2D+3D.

Not ideal for: Heavy enterprise governance needs or deep QA programs out‑of‑the‑box.

Why Taskmonk often wins for high‑risk LiDAR programs

When consistent, week‑over‑week LiDAR output matters, Taskmonk aligns an intuitive & robust 3D editor with program‑grade QA and delivery so drift is caught early and fixed.

It’s frequently chosen when teams want one accountable owner for labeling + QA + validation + exports, not a stitched toolchain. If you need a production‑ready pipeline and measurable quality, shortlist Taskmonk first.


Conclusion

LiDAR labeling is expensive in the places you cannot see on a demo: sequence drift, weak QA, and exports that do not line up with training.

Shortlist platforms based on sensor fusion fit, temporal consistency, and how measurable quality is across reviewers, then pilot on your hardest scenes at your real labeling volume.

Read this detailed guide for help choosing a data labeling platform.

If you want one system that combines LiDAR tooling with production-grade QA and delivery ops, Taskmonk is the safest baseline to evaluate first.

FAQs

- Which LiDAR annotation platform is best for autonomous vehicle startups?

The best fit is usually the platform that balances cost, speed, and QA rigor, and can handle sequences plus sensor fusion. Startups should prioritize fast onboarding, clear review workflows, and exports that plug into their training stack.

- How scalable are LiDAR annotation platforms for large datasets?

Scalability depends on more than UI performance. Look for workload routing, multi-level review, sampling-based QA, and dashboards for throughput and rework. A scalable platform keeps consistency stable as labelers, scenes, and class rules grow.

- Do LiDAR annotation platforms support real-time annotation?

Most LiDAR annotation platforms are built for offline labeling, not true real-time annotation. Some can label streaming-like sequences quickly, but production workflows typically involve batch ingestion, review loops, and dataset versioning rather than live labeling.

- What file formats do LiDAR annotation platforms accept?

Most platforms support common point cloud formats and sequence inputs, but exact support varies by vendor. Confirm the formats you use for point clouds, calibration, and synchronized sensor data, plus how they handle timestamps and frame alignment.

- How do I evaluate LiDAR annotation quality beyond “spot checks”?

Track disagreement rate, rework rate, review pass rate, and class-wise error patterns. For sequences, also monitor ID switches and temporal consistency issues. These metrics show drift early and keep QA measurable.

- Should we outsource LiDAR annotation or build an in-house labeling team?

Outsource when volume is high, timelines are tight, or you need trained labelers fast. Build in-house when specs change frequently and feedback loops must be instant. Many teams use a hybrid model.
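The quality metrics named in the FAQ on evaluating quality beyond spot checks (disagreement rate, rework rate, review pass rate, ID switches) can be computed from fairly simple records. The sketch below is one possible set of definitions, not a vendor's or an industry-standard formula: the `ReviewRecord` fields, the choice to count a disagreement when two independent labelers assign different classes, and the ID-switch rule are all assumptions to adapt to your own spec.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ReviewRecord:
    """Hypothetical per-object record exported from a labeling workflow."""
    label_a: str               # class chosen by the first labeler
    label_b: str               # class chosen by an independent second labeler
    passed_first_review: bool  # accepted by the reviewer without changes
    rework_rounds: int         # times the object was sent back for correction


def qa_rates(records: List[ReviewRecord]) -> Dict[str, float]:
    """Return disagreement, rework, and first-pass review rates in [0, 1]."""
    n = len(records)
    if n == 0:
        raise ValueError("no records to evaluate")
    return {
        "disagreement_rate": sum(r.label_a != r.label_b for r in records) / n,
        "rework_rate": sum(r.rework_rounds > 0 for r in records) / n,
        "review_pass_rate": sum(r.passed_first_review for r in records) / n,
    }


def count_id_switches(frames: List[Dict[str, str]]) -> int:
    """Count how often a reference object's labeled track ID changes.

    Each frame maps a reference object name to the track ID it carries in
    the labeled sequence. A switch is counted whenever an object reappears
    with a different ID than the last time it was seen.
    """
    last_seen: Dict[str, str] = {}
    switches = 0
    for frame in frames:
        for obj, track_id in frame.items():
            if obj in last_seen and last_seen[obj] != track_id:
                switches += 1
            last_seen[obj] = track_id
    return switches


if __name__ == "__main__":
    records = [
        ReviewRecord("car", "car", True, 0),
        ReviewRecord("car", "truck", False, 1),
        ReviewRecord("pedestrian", "pedestrian", True, 0),
        ReviewRecord("cyclist", "cyclist", False, 2),
    ]
    print(qa_rates(records))
    frames = [
        {"car1": "t1", "ped1": "t2"},
        {"car1": "t1", "ped1": "t3"},
        {"car1": "t4"},
    ]
    print(count_id_switches(frames))
```

Grouping the same rates by class or by labeler, and tracking them week over week, is what turns them into the drift signal the answer describes.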
